Are you a former HapYak customer managing your migration to our new Native Video Cloud interactivity module?

Visit our support documentation to guide you through the process.

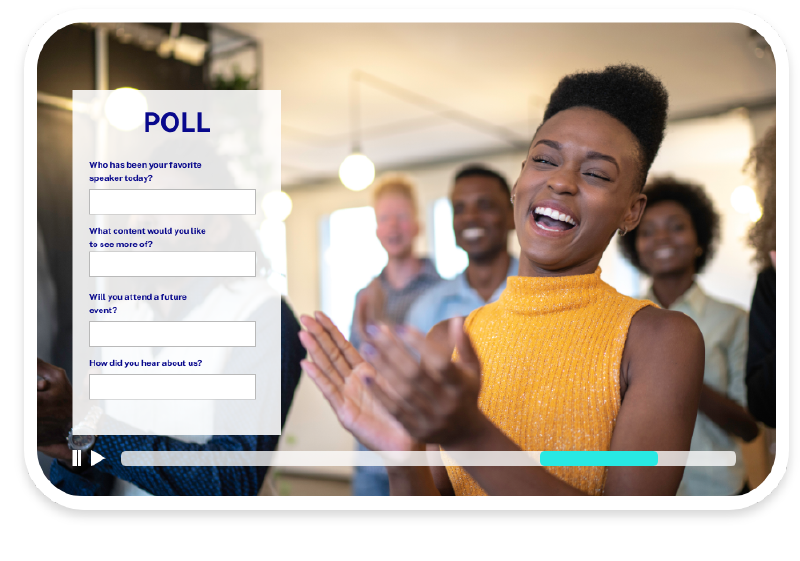

Engage audiences on a deeper level

Native Video Cloud Interactivity features turn viewers from passive watchers into active participants for deeper engagement and stronger connections. Our suite of customizable options makes it easy to add polls, quizzes, shopping carts, personalization, chapters, links, and more to your video. You’ll gain more conversions when you take your viewers from leaning back to leaning in.

Want to boost your video engagement with interactivity? Start by downloading our datasheet.

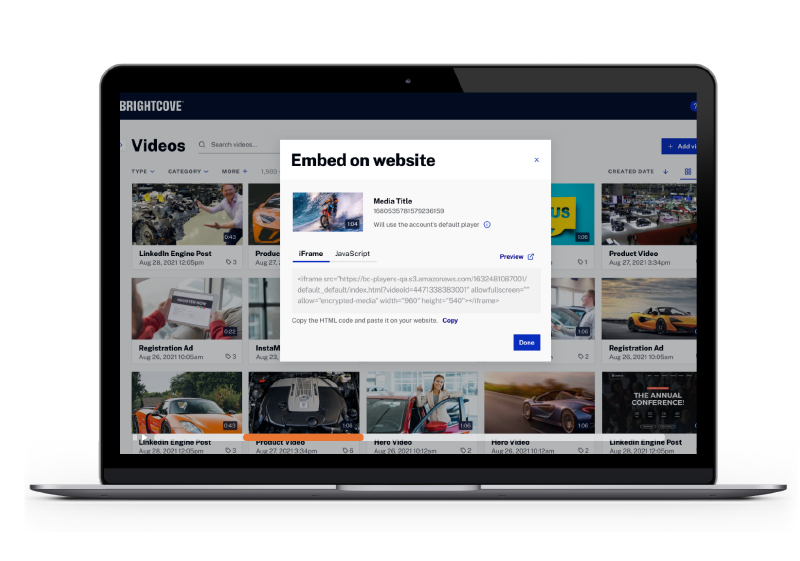

Customize one video or thousands quickly and easily

You can add branded interactive features to your videos at scale in just a few clicks with easy-to-use drag-and-drop tools. With Brightcove Interactivity, it’s simple to make a big impact on your business — no IT skills required.

Learn more about video interactivity — here’s a great introduction.

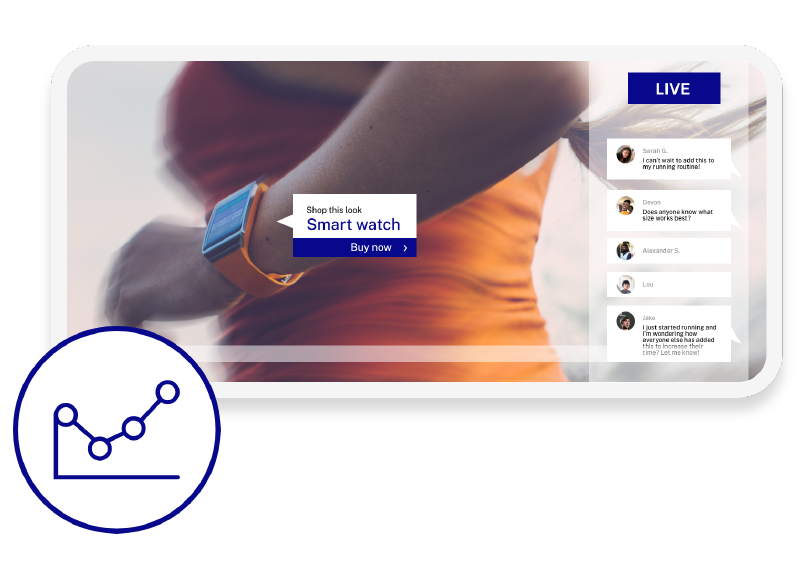

Drive ROI with personalization

Supercharge your video strategies with features that provide personalized experiences and encourage direct engagement.

Learn how interactivity is the future of e-commerce video.

Engage your live audiences, too

Brightcove Live Interactivity lets teams easily deliver interactive experiences to increase live audience engagement.

See what’s possible in real time — download our Live Interactivity datasheet.

Learn how various interactive features can impact your strategy.

Experience the power of interactivity

Explore our interactive HTML5 video gallery and see how you can use in-video chapters, links, CTAs, shopping carts, personalization, forms, quizzes, polls, templates, and other customizable types of video annotation to boost your audience engagement.

See what’s possible — visit the Brightcove Interactive gallery.
